SQL Server 2014 contains some interesting new features. Although SQL Server Standard Edition is limited to 128GB of memory, teams deploying on Standard Edition have an option to fit more of their working set in low latency storage – SQL Server Buffer Pool Extensions.

How SQL Server Normally Deals With Data

During SQL Server’s normal operations, data is read from disk into memory. At this point, the data is clean. Once the data is changed, it is marked as dirty. Eventually the dirty pages are written to disk and marked as clean; clean pages may be flushed from memory when the data cache (the buffer pool) comes under pressure. At this point the data is gone from memory and SQL Server has to read it from disk the next time a user runs a query that requires the data.

As long as you have less data than you have memory, this isn’t a problem. As soon as you have more data than you have memory, you’re at the mercy of physical storage.

Right now, some of you are probably saying “So what? I have an awesome EMC/3PAR/Hitachi VX6.” You also probably have SQL Server Enterprise Edition and a pile of unused RAM sticks. This blog post isn’t for you. Go away.

The rest of you, the 99%ers, listen up.

Speeding Up Data Access with Buffer Pool Extensions

SQL Server 2014 Buffer Pool Extensions are our new secret weapon against not having enough memory. Like most secret weapons, there’s a lot of hype surrounding Buffer Pool Extensions (BPE).

The idea behind BPE is a lot like the idea behind virtual RAM (better known as swap space): fast, low latency persistent storage is used to replace a portion of memory. In the case of BPE, SQL Server will use the disk space to store clean buffers – specifically BPE will hold unmodified data pages that would have been pushed out of RAM.

To see just how fast this was going to perform, I create a test instance of SQL Server and decided to find out.

Test Methodology

I ran SQL Server through a relatively boring test harness – TPC-C running through HammerDB. The database was created at a scale factor of 444 warehouses – this yields 44GB of data on disk, or near enough. SQL Server 2014 RTM was installed on Windows Server 2012 on an Amazon i2.8xlarge instance. To find out more about how the instance was physically configured, you can check out the instance type details page.

SQL Server was set up in a fairly vanilla way:

- Max degree of parallelism was set to 8

- Max server memory was left alone

tempdbwas given 4 data files located on a local all SSD RAID 0 of four drives- A second all SSD RAID 0 of four drives was reserved for BPE

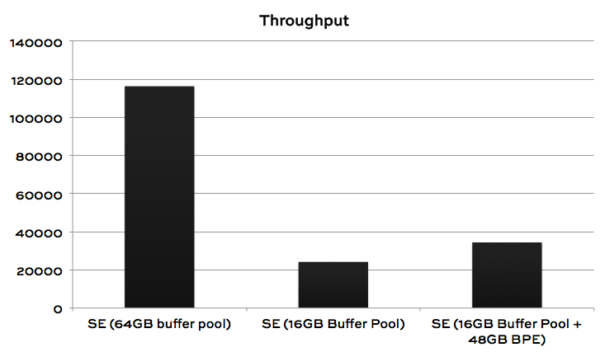

Tests were run multiple times and the results of the first test were discarded – many changes during this process could clear the buffer pool as such, the first test results were assumed to be anomalous. The remaining results were averaged to produce the following chart:

Conclusions

Having an appropriately sized buffer pool was far more effective than allocating considerable space to buffer pool extensions. BPE improved performance by 42.27%. This is not an insignificant performance gain, but BPE is no substitute for memory.

BPE will shine for customers deploying in dense, virtualized environments where memory is constrained but SSD is cheap and plentiful. Given the near ubiquity of SSD, and high speed consumer grade SSD being available for as little as $0.50 per GB, BPE may seem tempting. It may even provide some respite from performance issues. However, BPE is no substitute for RAM.

59 Comments. Leave new

Trying > 64GB BPE would also be worthwhile. Maybe, SQL Server still suffered a cache miss in 25% of the cases which can easily dominate and destroy performance. To have all data cached SQL Server would need to perfectly distribute 16GB data to RAM and 48GB to BPE. That seems unlikely to work out.

Also, I’d like to investigate a little *why* performance is still not much better. These numbers do not mean much because I cannot estimate from them how much my own server would benefit. Maybe there is an easy modification that could be done to speed the BPE version up considerably.

What were the wait stats during the BPE run? How much IO still hit the magnetic disks?

That does sound like a great experiment. You should duplicate the tests and blog what you find out.

I’ve been thinking about this post, and it’s missing something. Many people’s databases are on some type of shared disk, and any load you put on local disk with BPE is load taken off of the shared disks.

For us 1%ers with a good SAN this won’t be enough to get the SAN admin to take our picture off of their dart board since we still need most of their persisted shared disk. However, it will mean they’ll throw darts at that board a little less often as our data files start learning how to play well with others.

Now that I’m looking at it this way I can picture BPE being much more useful, especially for larger databases (>1TB) that can get expensive to hold in memory.

Hi Jeremiah,

EMC has XtremeSF cards that work similarly…

only in this case.. you could either use their drivers, to provide a similar cache… or just install their default drivers, and use the sql buffer pool extension feature, using the XtremeSF cards like the fusionIO cards….

Kind Regards,

andy.

Be cautious here. I have completed extensive testing with XtremeSF caching.

First, as Brent states – there is no substitute for RAM.

Second, some workloads benefit from using XtremeSF to cache frequently read data. The issue I ran into was that shifting the reads onto local storage would mean that a largely write workload was issued to the array. At a minimum Writes have 2 x more impact than reads (4 X with R5 and 6 X with raid 6). Thus is the shared array could perform 100,000 Reads, it can only perform 50,000 writes.

What we experienced was an overall array slowdown using the local caching.

why are we talking of RAM when in the graphic shown they talk about L1 and L2 Cache? can RAM or SSD substitute a fast L2 Cache?

Whichever delightful person who wrote the buffer pool extensions document, is referring to memory as L1 and disk as L2. “This creates a multilevel caching hierarchy with level 1 (L1) as the DRAM and level 2 (L2) as the buffer pool extension file on the SSD.”

In direct answer to your question, RAM and SSD will never break the laws of physics and will, thus, never be a substitute for all 32MB of L2 cache that your processor has on it.

Yeah, they should rather use “tier 1” and “tier 2” to not confuse readers 🙂

I have completely different results with regards to BPE.

System Specs:

12 Cores (X5650)

256GB of Ram Total Server Memory

SQL Server 2014 12.0.2000 with 64GB allocated to it.

I ran one of our larger jobs, and it completed successfully 3 times, all within several minutes of each other – 1 hour 58, 2 hours, and 1 hour 59 minutes. I then decreased SQL Server to 32GB of memory, and added a 128GB local SSD BPE. I attempted to run the job numerous times, and the job failed at different steps.

I then turned off BPE, reran the job at 32GB, and again it ran fine – only running several minutes slower. I set the instance back to 64GB, ran the job again, and it ran in 1:59:30. I renabled BPE and reran and it did complete… but it took nearly 7 hours!

Certainly not the results I was looking for. Any thoughts here?

LeRoy – if you’ve got 256GB of memory, why would you turn down the amount of max memory to below what SQL Server 2014 Standard Edition supports? What are you trying to achieve with your tests?

This is a single instance in an active active clustered environment – so memory is allocated across multiple instances, and free memory is retained for failover purposes.

We are performing testing to potentially move to the cloud, so the thought was that if we are currently running at 64GB, could we reduce our memory requirement and use BPE to still meet SLAs. Real memory is far more expensive at cloud providers than SSD is. This was purely a test to determine the feasibility of using BPE. While I didn’t expect any tremendous difference, I was extremely surprised that my job either fails or has drastically reduced performance when it does run.

LeRoy – you don’t need to leave extra memory for failover purposes. Set the min memory appropriately so that when multiple instances fail over onto the same hardware, the instances can live together temporarily until you get the hardware problem fixed.

About moving to the cloud – unfortunately, you can’t extrapolate on-premise hardware performance to cloud-based virtual server performance. Performance varies dramatically from vendor to vendor. You’ll want to try your tests up in the cloud on the platform you’re considering just as Jeremiah did.

FYI, I just got week 20 of the DBA Training plan (again) and it still says Standard Edition is limited to 64 GB of RAM.

Andrew – ooo, good catch! Fixed.

You produced 44 GB of test data and your test machine i2.8xlarge had 244 GB of Memory. (But in Standard Edition 128 GB of Memory should be used.) You didn’t set max server memory on your SQL Server instance. How come the buffer pool extension made a difference at all? Your data should fit into normal buffer pool anyway.

Because I did set max server memory. I limited the buffer pool to 64GB of the first test, 16GB for the second test, and then tested with 16GB of buffer pool and buffer pool extensions.

And what about sql server clusters, is it possible to use Buffer Pool Extensions in sql server 2014 cluster environment?

In Sql 2012 it is possible to install SSD to each node of a cluster and put tempdb on this SSD.

And if we have sql server 2014 cluster(active passive) how do we configure Buffer Pool Extensions?

And thank you for your fine post.

That’s a great question. I would start by re-asking the question “Should I use buffer pool extensions?”

If you ask me, the answer is a very emphatic “No”.

A single socket server with 4 CPU cores and 384GB of RAM costs $13,000 right now. I know that SSDs are even cheaper, but is it really worth that large of a performance hit?

YB1980 – sure, absolutely. And you can read more in Books Online about how this works as well.

Hi Brent

Are there any advantages to using BPE with a database that already resides on SSDs?

What about using RAMDisk?

Hi Ganesh,

First – Brent didn’t write the post, I did.

Second – Let’s think really carefully about your RAMdisk question – Why would you want to steal RAM from SQL Server and allocate it to a RAM disk instead of just allocating that memory to SQL Server? You will get far better use of memory by using it as memory instead of pretending it’s persistent storage.

If that RAM disk fails, and they do, your SQL Server won’t be able to start back up again because the buffer pool extension isn’t available again. This means your SQL Server is now completely depending on your RAM disk starting up correctly.

Your question about BPE with a database already on SSD sounds like a great experiment you could do. Let me know how that turns out.

Well, I did that test. I had a database on SSD and I ran tests with and without BPE. I used HammerDB for the tests. It turned out that the system is even a bit slower with BPE. No big surprise if you really think about it.

Yeah, even PCI-Express SSD vendors couldn’t make a realistically sized SQL Server workload perform better while using SSD. This feature only makes sense for hosting providers who cram hundreds or thousands of customers on a single SQL Server with an underpowered SAN.

Hello Jeremiah

Firstly apologies for addressing my comment to Brent, an honest mistake.

Just to clarify my question.

SQL Server 2014 Standard Edition has a memory limit of 128GB.

I was trying to understand whether using a RAMDisk to artificially extend SQL Server’s memory allowance by targeting the Buffer Pool Extension at a RAMDisk could act as an imperfect workaround to this restriction?

@Jorg “I had a database on SSD and I ran tests with and without BPE. I used HammerDB for the tests. It turned out that the system is even a bit slower with BPE.”

@Jeremiah “…PCI-Express SSD vendors couldn’t make a realistically sized SQL Server workload perform better while using SSD. This feature only makes sense for hosting providers who cram hundreds or thousands of customers on a single SQL Server with an underpowered SAN.”

@Jorg your results are interesting, what is hard to easily comprehend however is that it is “no big surprise” that it performs slightly worse. I admit to being slightly surprised. In what sense is it easy to see that using BPE would have a detrimental performance impact with a database already on SSDs?

@Jeremiah – are you saying that a buffer pool extension targeting an SSD only makes sense where your database resides on slower storage?

Could there not be any indirect benefits?

I think BPE only makes sense for very niche workloads like I outlined in my reply to Jörg. Otherwise, use your fast storage for your data, not for buffer pool extensions.

@Ganesh: Well, it’s the same speed reading a page from database file or from BPE file when both are on SSD. But there is a overhead of writing the page into the BPE file. That is why it is a bit slower with BPE.

(This is my opinion only, no quote from docs.)

Why steal RAM from SQL Server for allocating it to a RAM disk for BPE? Well, simple think about Standard Edition limitation of 128 GB…

I suppose that there are many situations where this artificial limitations is the main reason for people to buy the far more expensive Enterprise Edition. The RAM disk idea could circumvent this Limitation, right?

Give that a shot and let us know how it works out for you.

Hi guys,

I’ve a sql 2014 EE CU6 with 50GB of SQL Server MAXServerMemory and 190GB of Buffer Pool Extension on an SSD LUN exposed by SAN, I experience a very strange behaviour:

After some days of usage the performances of the server slows down, for example I’ve an huge job that usually completes in 4.000 secs after a week is completes in 9.000 or more secs but the very strange thing is that at “idle” I mean with very few batch requestes/sec, almost 0 MB/sec of I/O, I have the CPU of the server at a costant level of 25%….

If I restart the istance of SQL Server, CPU usage return to normality and my job completes in 4.000 secs.

I’ve opened a case with Microsoft GBS they are trying to understand, in the meanwhile I’ve discovered that there is some “bug” in the BPE: withoust restarting my instance, if I simply disable and re-enable BPE, the performances of the server are optimal and the “strange CPU usage at idle” does not occurs.

I’ve noticed that the problem arises when the buffer pool extension is almost filled (and in my environment it starts after some days of usage), by now I’ve adopted a very “dirty” workaround, disabling and re-enabling BPE every night when the server has few load.

Andrea – you’re doing the right thing by opening a case with Microsoft. This is pretty far beyond what we can do in a blog comment. Good luck with the case, though!

Just generally speaking, it doesn’t make sense to use BPE hosted on SAN-based SSDs. Those are the world’s most expensive solid state drives. You’re better off using commodity SSDs in the server itself.

Hi Brent,

thank you for your answer, I use SSD on SAN coz my instance is clustered (May I use local storage for BPE? idk).

The servers forming the cluster are virtual machine and the hosts where VM are running on have no local storage (they boot from SAN) so the only solution (if I can use local storage for BPE) is to buy PCI-E SSD on our hosts (blade servers), and they are expensive more the SSD “disks” on SAN.

Andrea – yes, you can use local storage for BPE. If you’re using VMs on blades, BPE on the SAN isn’t really a great solution – you’re better off increasing the RAM in the guest.

I’m a little confused by the testing and conclusions, it seems like you are putting a server with a proper spec of ram against one with an improper ram spec + BPE after which you say, “nanny-nanny-boo-boo ram won again,” then sticking your tongue out and running home. Wouldn’t a purpose of BPE be for a server with lots of memory + 1 or more big databases to help ease the pressure? It seems like a more productive test would be a (maxed) server with 512GB of ram, a 1-1.5TB database, and a unused 800GB SSD or 2. Then see how much *better* it does with BPE vs. no BPE, instead of how much worse it is with crippled ram.

Forgot to specify: the 1 – 1.5TB database is on a SAN… (cue prepared piano)…

Microsoft’s advice is that the Buffer Pool Extension be at least 4 times the size of the current buffer pool. If I have a server with 512GB of memory, 2TB of BPE stored on PCI-Express SSD, and 1.5TB of data on the SAN, I should probably be fired for not using the PCI-Express SSD for storage.

Based on Microsoft’s recommendations, BPE is designed for precisely the situation you deride.

BPE is delightfully single threaded and it only retrieves one page at a time. For large systems it becomes an even larger bottleneck that what we demonstrate here.

Thanks much for the link, very helpful. In my, (unfortunately real world example), there is no danger in getting sacked because the CIO has already decreed that all databases have to live on the SAN. Single threaded- eek. That is news to me, but it seems like it would still be faster than the storage for large datasets. Going to have to think about how to test that one…

Kyle – if you’re talking about large datasets, that’s exactly where the single-IO-queue, one page at a time is even WORSE. Definitely test that – it’s easy to see the performance problem by looking at the disk queue length and average IO size counters.

Terrific insight as always guys! Probably saved me some useless wheel spinning. I find it interesting that very little is mentioned of BPE being single threaded elsewhere. Oh well we can always hope for multithreading in a future version…

May be worthwhile trying this again with an Optane SSD once they come out this year.

VladG – the root of the problem is that BPE’s writes are single-threaded, and one 8K page at a time. No storage device is going to succeed under those parameters.

Brent, I believe you meant “reads” are single threaded. That is what I had thought too until I found this blog by Ramesh Meyyappan (http://blog.sqlworkshops.com/slow-performance-due-to-sql-server-2014-buffer-pool-extension-bpe-and-serial-queries-maxdop-1/) where he shows that it’s only single-threaded if your query is serial. A parallel query will use multiple threads (as many as the DOP) to read from BPE.

Besides, Optane is not your average storage device. It’s based on a new class of non-volatile memory that is closer to DRAM in terms of latency and throughput than it is to flash (https://newsroom.intel.com/press-kits/introducing-intel-optane-technology-bringing-3d-xpoint-memory-to-storage-and-memory-products/). Intel is promising ~10?s latency for ‘small reads’ over NVMe (not sure what they mean by ‘small’ here, though).

This could be a killer combination for those of us who try to avoid the costs of the enterprise edition.

VladG – right, but read the entirety of Ramesh’s blog. It’s still single-threaded IO – just done with one 8KB page request per worker. If your query hits MAXDOP 8, that’s only 8 requests for a single 8KB page sent at a time. Enterprise’s read-ahead will blow that out of the water even on crappy storage.

Best of luck with the project, though! I’d love to read a post about what you find.

I got 2 questions: 1) Is BPE in SQL2016 still single-threaded, as Brent and Jeremiah wrote regarding SQL 2014?

And 2) if I had server with 128GB RAM with a single instance of SQL 2016 standard (which should support BPE), would the BPE be able to overcome the standard edition’s limit of 128GB RAM ? Or is the MaxServerMemory + BPE limited to 128GB?

Thanks for help!

Step:

1 – check Books Online to see if there are any improvements. I haven’t heard of any, but I haven’t looked.

2 – BPE is above and beyond max server memory, but remember that it’s not really memory. It’s disk.

2: Interesting information – that’s exactly what I wanted to know!

Can someone provide an official documentation link for this? Cannot find anything at BOL…

Additional question:

SQL 2016 Standard Edition, maximum_server_memory=128 GB

When I add 4*128 GB BPE, do I have to change the maximum_server_memory value to 5*128 GB ?

Thank you!

Matthias, you don’t need to change the maximum server memory because BPE are not memory, they are disk. You are still limited to SE max memory of 128GB.

Hi,

Let us know, Shall we configure BPE in shared storage?

Does SQL Server expect the BPE to be persisted between server restarts? If it’s just acting as an extension of RAM I wouldn’t expect it to, but I don’t want any nasty surprises.

We’re using AWS and the local SSD is wiped between server restarts.

Zac – the easy answer there would be to test it, right?

“The buffer pool extension file is deleted upon shutdown of the instance of SQL Server”

Source: https://msdn.microsoft.com/en-us/library/dn133176%28v=sql.120%29.aspx#Return Information about the Buffer Pool Extension

Sou you can BPE even on a Cluster (with SSDs on all nodes)

Hi Brent,

What is the best practice for the drive allocation unit for the BPE ? (is it OK to leave it as a 4K – default)

* I have one SSD drive which I would like to use for TempDB and BPE , so in that case I don’t know if set the allocation unit to 64K (for tempdb) or just leave it as 4K ??

Thanks ,

Hi Jeremiah, Nice article. You referred to this link in your page for an ‘Amazon i2.8xlarge instance’, however I am unable to find this specification on that page. Do you know how much initial RAM you started with?

http://aws.amazon.com/ec2/instance-types/#Instance_Type_Details

Hi Paul, Jeremiah doesn’t work here anymore, and this article is pretty old. Perils of the cloud! You’ll want to use whatever the current generation of machines is.

Thanks!

I am considering rolling this feature out to our azure vm (iaas) 4 node availability group. Ideally, I would like to take advantage of the volatile SSD that comes with the VM. Currently, I use this for tempdb and have a task that creates a folder if the server ever restarts so tempdb is happy when sql server starts back up.

I was wondering if I could drop the BPE on the volatile SSD that is wiped out every time the server is rebooted? Does BPE have any issues with volatile storage?

https://cloudblogs.microsoft.com/sqlserver/2014/09/25/using-ssds-in-azure-vms-to-store-sql-server-tempdb-and-buffer-pool-extensions/

I done went and googled.

[…] Cette technique n’est pas aussi rapide que d’avoir assez mémoire, mais cela peut vous donner une augmentation modeste du débit lorsque votre disponibilité la mémoire est basse. Vous pouvez en savoir plus sur les extensions de pool de mémoire tampon et examiner certaines analyse comparative des résultats sur Brent Ozar site. […]

There is a problem. We have scheduled the turning off BPE (for external nightly maintenance job). And SQL server started to hang with memory allocating errors in logs every second night. BOL doesn’t say anything about mandatory instance reset after turning off the BPE. And we also don’t want it to be reset.

It’s unclear why it can’t handle with changing of BPE without reset, while it contains only the “clean pages”? Even size of available RAM can be recofigured online without the issues.

Alexander – for support questions, you’ll need to contact Microsoft.