Cancer research is one of the last areas of human endeavor in which anyone would want to "cut corners." Yet that's exactly what scientists must often do when the computational resources available to them can't handle every part of the experiments they want to run.

That is the problem described by Ramy Farid, president of a New York firm called Schrödinger that makes simulation software for use in pharmaceutical and biotechnology research. The company operates a 1,500-core cluster to perform research, but it’s often not enough.

"We cut all kinds of corners. We use less accurate scoring functions. We do less sampling of the conformations of a compound. We are always having to make decisions like that," Farid, who earned a Ph.D. in chemistry from Caltech in 1991, told Ars.

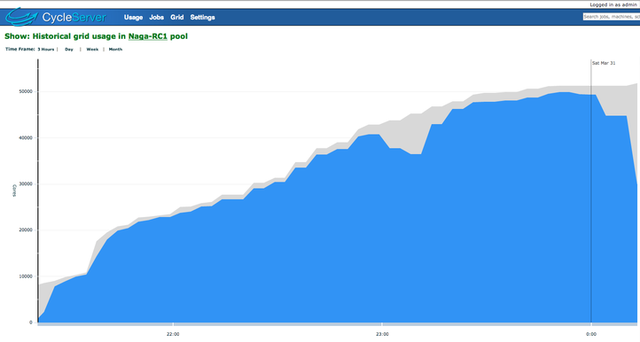

Farid and his team recently decided to stop cutting corners, at least for a joint cancer research project with Nimbus Discovery, a company that does computer-based drug discovery. Instead of using Schrödinger's internal cluster, they opted to build a 50,000-core supercomputer on Amazon's Elastic Compute Cloud (EC2).

It ran for three hours on the night of March 30, at a cost of $4,828.85 per hour. Reaching 51,132 cores required spinning up 6,742 Amazon EC2 instances running CentOS Linux. This virtual supercomputer spanned the globe, tapping data centers on four continents and every available Amazon region, from Tokyo, Singapore, and São Paulo to Ireland, Virginia, Oregon, and California. As impressive as it sounds, anyone with the proper expertise can spin up such a cluster without talking to a single Amazon employee.
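For a sense of scale, the reported figures can be turned into a rough bill and a per-core price. This sketch assumes the $4,828.85 hourly rate applied uniformly for all three hours and divides by the peak core count; because the cluster ramped up over the run, treat both numbers as approximations rather than Amazon's actual invoice.

```python
# Rough cost arithmetic from the reported figures.
# Assumption: the hourly rate held for the whole run and is
# spread over the peak core count (the cluster actually ramped up).
HOURLY_RATE_USD = 4_828.85   # reported Amazon charge per hour
RUN_HOURS = 3                # reported duration
PEAK_CORES = 51_132          # reported peak core count

total_cost = HOURLY_RATE_USD * RUN_HOURS
cost_per_core_hour = HOURLY_RATE_USD / PEAK_CORES

print(f"Total: ${total_cost:,.2f}")                 # Total: $14,486.55
print(f"Per core-hour: ${cost_per_core_hour:.4f}")  # Per core-hour: $0.0944
```

In other words, roughly $14,500 for the run, or a bit under a dime per core per hour.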

Data turns up potential leads for cancer treatment

Schrödinger's cluster was deployed by Cycle Computing, which builds software designed to take the raw computing power offered by Amazon and turn it into what Cycle CEO Jason Stowe likes to call a "utility supercomputer." Cycle handles data routing, error handling, and other automation that turns Amazon's virtual servers into a "functioning computing environment that doesn't require you to rewrite your applications because it looks like an internal high-performance computing system," Stowe said.

The cluster proved to be a success, turning up leads for future research that Schrödinger would have missed had it been forced to cut corners by using its more limited in-house resources.

Schrödinger screened 21 million compounds, synthesized by chemistry labs, that could potentially be used in drugs. The full screen ran on Amazon, but Farid and his team also ran the same screen internally, with corners cut, to see how the results would compare.

"When we do a virtual screen on our in-house cluster, we have to cut some corners because we can’t wait a year for the screen to finish," Farid said.

Farid explained that his team was looking at "pharmaceutical drug targets, proteins in this case, with a drug or drug candidate, often referred to as a 'ligand,' bound to the protein. What we did on the Amazon cluster was to search, using 'virtual screening,' for ligands that are predicted to bind to a particular target that has been implicated in several different cancers."

As it turned out, the corner-cutting internal run produced false negatives: compounds it passed over that the Amazon run correctly identified as potential ligands for the target. Cutting corners can also produce false positives. Based on the Amazon results, Farid said, Schrödinger will purchase compounds for further testing that it otherwise would have missed.
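The comparison Farid describes boils down to set differences between two hit lists. The sketch below treats the more thorough screen as the reference (it is a better prediction, not experimental proof) and classifies where the cheaper screen disagrees. The compound IDs are hypothetical placeholders, not Schrödinger data.

```python
def compare_screens(reference_hits: set[str], approximate_hits: set[str]) -> dict[str, set[str]]:
    """Classify an approximate screen's hits against a more thorough reference screen."""
    return {
        # Flagged by the thorough screen, missed by the corner-cutting one.
        "false_negatives": reference_hits - approximate_hits,
        # Flagged by the corner-cutting screen, rejected by the thorough one.
        "false_positives": approximate_hits - reference_hits,
        # Flagged by both.
        "agreed": reference_hits & approximate_hits,
    }

# Hypothetical compound IDs, for illustration only.
thorough = {"CMPD-001", "CMPD-002", "CMPD-003"}
cheap = {"CMPD-001", "CMPD-004"}
result = compare_screens(thorough, cheap)
print(sorted(result["false_negatives"]))  # ['CMPD-002', 'CMPD-003']
print(sorted(result["false_positives"]))  # ['CMPD-004']
```

The false negatives are the compounds worth buying that a cheaper screen would never have surfaced.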

This is just the first step in an iterative process that can take many years. There’s no guarantee that any cancer medication will be developed as a result, but at least there’s a chance.

Using a sports analogy, Farid said tapping Amazon's huge resources is like being able to take many more shots on goal. As Stowe sees it, the utility supercomputer lets researchers ask the right questions without fear that they won't have the computing power to answer them.

Scaling up to 50,000 cores

This isn’t the first cluster Cycle Computing has built on Amazon, but it is the largest. We reported last September that Cycle built a 30,000-core cluster for $1,279 per hour for an unnamed pharmaceutical customer.
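Normalizing the two runs by core count makes them easier to compare. This sketch simply divides each reported hourly price by its reported core count; the article doesn't explain the difference, and the 30,000 figure is a round number, so read the result as approximate.

```python
def cost_per_core_hour(usd_per_hour: float, cores: int) -> float:
    """Hourly price divided evenly across all cores."""
    return usd_per_hour / cores

# Reported hourly price and core count for each run.
runs = {
    "30,000-core run": (1_279.00, 30_000),
    "51,132-core run": (4_828.85, 51_132),
}
rates = {name: cost_per_core_hour(*args) for name, args in runs.items()}
for name, rate in rates.items():
    print(f"{name}: ${rate:.4f} per core-hour")
# 30,000-core run: $0.0426 per core-hour
# 51,132-core run: $0.0944 per core-hour
```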

The 51,132-core cluster didn't start that large; it scaled up steadily, hitting its peak sometime in the third hour. The cluster used 58.78TB of RAM and was secured with HTTPS, SSH, and 256-bit AES encryption. Amazon is somewhat secretive about the hardware behind its infrastructure, but Stowe said the cluster likely ran on servers based on Intel's Nehalem and Sandy Bridge processors.
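Dividing the reported totals gives average figures for the fleet. This sketch assumes decimal terabytes (1 TB = 1,000 GB); since the run likely mixed instance types, these are averages across the whole cluster, not the spec of any particular instance.

```python
PEAK_CORES = 51_132
INSTANCES = 6_742
RAM_TB = 58.78  # assumption: decimal terabytes

ram_gb = RAM_TB * 1_000
print(f"Cores per instance: {PEAK_CORES / INSTANCES:.2f}")  # Cores per instance: 7.58
print(f"RAM per core: {ram_gb / PEAK_CORES:.2f} GB")         # RAM per core: 1.15 GB
print(f"RAM per instance: {ram_gb / INSTANCES:.2f} GB")      # RAM per instance: 8.72 GB
```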

The cluster used a mix of 10 Gigabit Ethernet and 1 Gigabit Ethernet interconnects. However, the workload was what’s often known as "embarrassingly parallel," meaning that the calculations are independent of each other. As such, the speed of the interconnect didn’t really matter.
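To see why an "embarrassingly parallel" job doesn't care about the interconnect, consider a screen where each compound is scored on its own. In this sketch, `score` is a hypothetical stand-in for a real docking calculation, and a thread pool stands in for the cluster's worker nodes. The point is that however the compounds are split up, each chunk needs nothing from any other chunk, and stitching the results back together gives the same answer as a serial run.

```python
from concurrent.futures import ThreadPoolExecutor

def score(compound_id: int) -> float:
    """Hypothetical stand-in for a docking score; real scoring is far costlier."""
    return (compound_id * 37 % 101) / 100.0

def chunk(items: list[int], n_chunks: int) -> list[list[int]]:
    """Split items into contiguous pieces of roughly equal size."""
    size = -(-len(items) // n_chunks)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]

def score_chunk(ids: list[int]) -> list[float]:
    # No messages or shared state between chunks: each worker runs alone.
    return [score(c) for c in ids]

compounds = list(range(1_000))
with ThreadPoolExecutor(max_workers=8) as pool:
    # pool.map returns results in input order, so chunks reassemble cleanly.
    parts = list(pool.map(score_chunk, chunk(compounds, 8)))

parallel = [s for part in parts for s in part]
serial = [score(c) for c in compounds]
assert parallel == serial  # partitioning doesn't change the answer
```

Only the input compounds and the final scores ever cross the network in a job shaped like this, which is why slower links cost little.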

The $4,828.85 hourly cost is what was paid to Amazon; Schrödinger also pays Cycle for a subscription service. By comparison, building the kind of data center that could house a 50,000-core cluster could cost $20 million to $25 million, Stowe said.
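A crude break-even estimate follows from Stowe's figures: how many hours of full-scale rental the cost of building a data center would buy. This sketch ignores Cycle's subscription fee as well as the power, cooling, staffing, and hardware refreshes an owned facility would need, so it only indicates the order of magnitude.

```python
HOURLY_RATE_USD = 4_828.85  # reported Amazon charge per hour

def breakeven_hours(build_cost_usd: float, hourly_rate_usd: float = HOURLY_RATE_USD) -> float:
    """Hours of full-scale rental that the build cost would pay for."""
    return build_cost_usd / hourly_rate_usd

for build_cost in (20_000_000, 25_000_000):
    hours = breakeven_hours(build_cost)
    print(f"${build_cost:,}: ~{hours:,.0f} hours (~{hours / 24:.0f} days)")
# $20,000,000: ~4,142 hours (~173 days)
# $25,000,000: ~5,177 hours (~216 days)
```

For a group that needs this scale for a few hours at a time, that is a great many runs.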

Certainly, Amazon isn't the only company to offer HPC services on demand. Supercomputing centers sell time to researchers, but there is often a significant wait before researchers can access those resources, Stowe said.

With Amazon, "we can provision this environment dynamically without having to interact with people or do any form of manual labor," Stowe said.

In future supercomputing runs, Cycle may take advantage of Amazon’s "spot instances," which let customers bid on unused capacity and can lower the price. But for the 50,000-core run, the challenge was ensuring that Cycle’s customer could get all the capacity it needed. That’s why it was run across multiple continents.
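The article doesn't describe how Cycle's software decides where to find capacity, but the idea of spreading a request across regions can be sketched with a simple greedy allocator. Everything here is hypothetical: the function, the largest-pool-first policy, and the per-region capacity numbers are illustrative assumptions, and only the region names come from the article.

```python
def allocate(target_cores: int, available: dict[str, int]) -> dict[str, int]:
    """Hypothetical greedy allocator: take cores region by region until the target is met."""
    plan: dict[str, int] = {}
    remaining = target_cores
    # Try the largest pools first so fewer regions are involved.
    for region, cores in sorted(available.items(), key=lambda kv: kv[1], reverse=True):
        if remaining <= 0:
            break
        take = min(cores, remaining)
        plan[region] = take
        remaining -= take
    if remaining > 0:
        raise RuntimeError(f"short by {remaining} cores across all regions")
    return plan

# Hypothetical spare capacity per region (in cores), for illustration only.
available = {
    "Virginia": 20_000, "Oregon": 12_000, "California": 8_000,
    "Ireland": 6_000, "Tokyo": 3_000, "Singapore": 2_000, "São Paulo": 1_000,
}
plan = allocate(50_000, available)
print(plan)
```

No single hypothetical region can supply 50,000 cores here, so the request only succeeds by drawing on several, which mirrors the reasoning behind running across multiple continents.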

Stowe is always trying to one-up Cycle’s past achievements, but topping 50,000 cores will take some careful planning.

"We knew that we could hit something in this range," Stowe said. "But in order to acquire this, we had to be ready to grab infrastructure wherever we may find it."
