Introduction

Most high-level programming languages are able to evaluate boolean expressions using an optimization called short-circuiting, which can stop evaluating an expression as soon as the result can be determined.

For instance, we could have an expression such as:

if((a == b) &&(b == c))

result = true;

In this case, the AND operator (&&) can stop evaluating the expression as soon as it finds that a is not equal to b, because the second part of the expression (b == c) cannot affect the overall result (the AND operator only evaluates to true if both sides evaluate to true).

The same works for the OR (||) operator:

if((a == b) ||(b == c))

result = true;

The evaluation can stop as soon as the first part of the expression is evaluated if a equals b.

You can take advantage of this feature to optimize your code, putting expressions with a lower evaluation cost (or that are more likely to lead to short circuiting) in the left side of the operator. It can also help the developer to avoid error conditions, coding expressions in a way that short circuits the problematic part.

Looks like a cool feature, but the pieces of code above work in languages such as C#, C++ or Java. We are SQL dudes; can we take advantage of short-circuiting in T-SQL?

It depends. This feature relies on the evaluation order of expressions, which is always left to right for procedural programming languages.

Procedural versus Declarative

Procedural languages explicitly define the algorithms to achieve the goals of the program, while declarative languages define just what results are required, leaving the task of finding the appropriate algorithm to the underlying software system.

T-SQL is a declarative language; it specifies the data needed from the database, but not the details of the extraction process itself. That means there is no first and no second in expression evaluation: the database engine is free to evaluate the expressions in any order it finds appropriate, regardless of whether they are on the left or right side of the boolean operator.

This does not only mean that the expressions will be interpreted in a non-predictable order, it also means that the engine could choose not to use short-circuiting, whatever order it decides to pick to evaluate the expressions.

However, T-SQL is not a purely declarative language, it is a SQL dialect that has evolved from the standard with the introduction of procedural constructs, user-defined types and many other proprietary extensions. The constructs that allow T-SQL to perform loops and branches are known as control-of-flow language.

To introduce short-circuiting in SQL Server, I will first examine the way it handles this feature for procedural constructs.

Short-Circuiting for Control-of-Flow Language

The main constructs that expect and evaluate a boolean expression in control-of-flow language are IF and WHILE. Since these both interpret expressions in the same way, I will explore short-circuiting behaviour just for the IF statement, leaving the reader to verify that WHILE works in the same way.

The first question to answer is, “does the IF statement short-circuit expressions?” The answer is definitely yes. Let’s try some statements with demonstrative theoretical values to verify this behaves as expected:

IF 1/0 = 1 AND 1 = 1

SELECT 'True'AS result

ELSE

SELECT 'False'AS result

When we execute this statement we get an exception:

Msg 8134, Level 16, State 1, Line 1

Divide by zero error encountered.

The reason for this is simple: the engine evaluated the expression on the left side of the AND operator and suddenly got a divide by zero exception. When XACT_ABORT is set to ON, the batch is terminated, otherwise the row holding “False” is returned.

Ok, so we just have to reverse the order of the two expressions and change the one on the left side slightly to let short-circuiting happen:

IF 1 = 0 AND 1/0 = 1

SELECT 'True' AS result

ELSE

SELECT 'False' AS result

This is what we get when this statement is executed:

result ------ False

SQL Server interpreted the IF statement and found that the expression on the left part of the AND operator is false, so it skipped the evaluation of the right part, because it would not have changed the value of the whole expression.

Now we just have to verify that the same behaviour applies to the OR operator:

IF 1 = 1 OR 1/0 = 1

SELECT 'True' AS result

ELSE

SELECT 'False' AS result

result ------ True

So far we can say that the IF statement behaves in the same way both in T-SQL and in procedural languages such as C# and Java. But if we push our tests a bit further, we can easily find a small but significant difference. Consider this statement:

IF 1/0 = 1 AND 1 = 0

SELECT 'True' AS result

ELSE

SELECT 'False' AS result

We would expect this to raise error, but, surprisingly enough, it returns a result:

result ------ False

The explanation is that the engine does not interpret expressions strictly left to right as it does in procedural languages. The query optimizer is able to analyze the statement to identify expressions that are evidently false and mark one of the sub trees of the IF statement execution plan as “dead,” without having to interpret impossible expressions at run time.

This optimizer feature is known as “contradiction detection” and is performed on all expressions that can be evaluated at compile time, such as those that contain constant values.

Let’s see what happens when working with variables:

DECLARE @a int = 1

DECLARE @b int = 0

IF 1/0 = 1 AND @a = @b

SELECT 'True'AS result

ELSE

SELECT 'False'AS result

Msg 8134, Level 16, State 1, Line 5

Divide by zero error encountered.

The main difference here is that the contradiction happens at run time and cannot be detected by the query processor and no “reverse order short-circuit” can be applied. However, what we have discovered so far is that T-SQL control-of-flow language does perform short-circuiting, but with some differences from that found in a procedural language.

Short-Circuiting for Data Manipulation Language

Let’s take a look at short-circuiting for WHERE clauses in DML queries. We will need a temporary table to perform our tests:

IF OBJECT_ID('tempdb..#Test')IS NOT NULLDROP TABLE #Test

CREATE TABLE #Test (

a INT NOTNULL PRIMARY KEYCLUSTERED,

b VARCHAR(128) NULL,

c INT NULL

)

INSERT INTO #Test

SELECT TOP (10000)

ROW_NUMBER()OVER (ORDERBY sc1.id)AS a,

CASE

WHEN ROW_NUMBER()OVER (ORDERBY sc1.id)% 5 = 0 THEN sc2.name

ELSE CAST(sc1.colid AS VARCHAR(128))

END AS b,

sc2.colid AS c

FROM master.dbo.syscolumns sc1,

master.dbo.syscolumns sc2;

This code creates a 10,000-row table with 2 integer columns (a and c) and a varchar column that stores some numeric and some non-numeric values.

SELECT * FROM #Test WHERE b < 10;

Msg 245, Level 16, State 1, Line 1

Conversion failed when converting the nvarchar value 'audit_spec_id' to data type int.

If we try to convert all the values in column b into an integer, we get an error. We get the same error even if we add another condition to the expression to only consider rows with numeric values in column b:

SELECT * FROM #Test WHERE b < 10 AND ISNUMERIC(b)= 1;

Msg 245, Level 16, State 1, Line 1

Conversion failed when converting the nvarchar value 'audit_spec_id' to data type int.

The first thing to test is whether short-circuiting can happen or not. Reversing the order of the expression makes the difference:

SELECT TOP 5 * FROM #Test WHERE ISNUMERIC(b)= 1 AND b < 10

a b c ----------- ---------- ----------- 1 1 5 2 1 6 3 1 5 4 1 3 6 1 1 (5 row(s) affected)

This prevents the expression from raising the error, so we can say without any doubt that short-circuiting can also happen during WHERE clause evaluation.

What we really have to ask ourselves is how this actually works and how much we can rely on the short-circuiting behaviour. Will the evaluation order always depend on the order of the filters in the WHERE clause? Will the engine always evaluate expressions from left to right or will it choose different evaluation orders when other elements have to be taken into account?

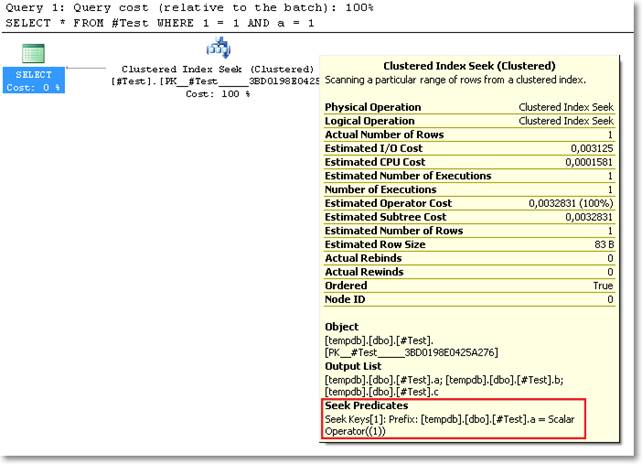

In the above queries, the evaluation order is left to right because the query optimizer decided to perform a clustered index scan and evaluate each predicate while scanning. If we add another condition that makes the optimizer decide for a different plan, things change a lot:

SELECT * FROM #Test WHERE b < 10 AND ISNUMERIC(b)= 1 AND a = 1

a b c ----------- ---------- ----------- 1 1 5

Since all rows that match the predicate “a = 1” contain numeric values in column b, no matter how we change the order of the conditions, the query cannot fail, because the optimizer will always choose a plan that first seeks for a = 1 and evaluates the other conditions later.

This shows SQL behaving in a declarative manner as expected. No assumptions can be made about the algorithm that will be chosen to extract the data based on the syntax of the request. The only things that can influence the choice of execution plan are indexes statistics and constraints, not the order the conditions are encountered in the command.

It is not possible to predict whether the optimizer will choose to short-circuit or not. If it decides that evaluating the conditions one at a time is more convenient, no short-circuiting will happen.

Let’s try adding a new index and consider this simple query:

CREATE NONCLUSTERED INDEX IX_ColumnC ON #Test (c);

SELECT T1.*

FROM #Test AS T1

INNER JOIN #Test AS T2

ON T1.a = T2.c

WHERE T1.a = 1 AND T2.c = 1;

The execution plan uses two index seeks:

Two independent seeks can only mean that each condition on the constant filter values is evaluated separately and then the resulting rows are joined later. No short-circuiting can happen here.

Tautological expressions

The word tautology was used by the ancient Greeks to describe a statement that was true in every possible interpretation merely by virtue of saying the same thing twice. For instance, “tables are tables” is a tautological statement.

Tautological expressions are always true, so that they can safely be short-circuited without changing the meaning of the overall statement. For instance, filtering with the predicate “1 = 1” will return all rows, without evaluating the condition at all.

Consider the following query:

SELECT * FROM #Test WHERE 1 = 1 AND a= 1

As you can see from the execution plan, the seek predicate does not contain “1 = 1”, as it was stripped away by contradiction detection.

When the tautological expression is combined with other expressions using the OR operator, no filter is applied to the target table and all rows are returned:

SELECT * FROM #Test WHERE 1 = 1 OR a= 1

Moreover, when the tautological expression is negated, we get a contradiction. The target table does not even get queried, and a constant scan is performed:

SELECT * FROM #Test WHERE NOT (1 = 1)

Getting back to the short-circuiting topic, we might be tempted to take advantage of SQL Server’s ability to identify tautological expressions and build “catch-all” queries to let the engine strip away tautological expressions and contradictions.

Typically, a catch-all query is used inside a stored procedure to filter data only on parameters that are passed with a value different from NULL, using an expression such as:

SELECT * FROM #Test WHERE a =ISNULL(@parameterA,a);

The idea behind this code is making the database engine return all rows when @parameterA contains NULL, as if the query was written this way:

SELECT * FROM #Temp WHERE a =a

Gail Shaw (http://sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/ ) and Erland Sommarskog (http://www.sommarskog.se/dyn-search-2005.html ) describe in depth how to handle this kind of query and I suggest that you read these great articles.

If the one above was a tautological expression, it could be short-circuited safely, as we have seen in our previous examples involving constants. Unfortunately, NULL values have to be taken into account.

Let’s try and see what happens:

SELECT COUNT(*) FROM #Test WHERE c =c OR b= 1

count

-----------

10000

The first part of the expression is always true, so there is no need to evaluate the second part, that would fail if evaluated (since we have got some non numeric values in column b). In this particular situation, the internal short-circuiting prevents the query from failing, but how reliable is this behaviour?

Looking at the execution plan, we can see that the expression “c = c” is not considered tautological at all and the contradiction detection feature does not remove the expression from the scan predicate.

This means that the query processor evaluates the expression “c = c” and it doesn’t fail just because the expression is actually true, not by definition. Does this mean the ancient Greeks were wrong? Not exactly: tautology means saying the same thing twice and, in the three-valued boolean logic of T-SQL, NULL is not the same thing as anything.

This behaviour can be easily verified updating some rows and repeating the test:

--Put some NULL values in column c UPDATE TOP(10) #Test SET c = NULL --Repeat the test SELECT COUNT(*) FROM #Test WHERE c = c OR b = 1

Msg 245, Level 16, State 1, Line 1

Conversion failed when converting the varchar value 'audit_spec_id' to data type int.

When column b stores NULL values, the engine evaluates the first part of the expression (c = c), finds it false and then has to evaluate the second part (b = 1), that fails.

Short-Circuiting in Common DML Expressions

CASE

Case is the SQL equivalent to “switch” or “Select Case” statements in procedural languages. We have seen that relying on the behaviour of looping and branching statements in procedural languages is not a good starting point to predict their behaviour in T-SQL.

The CASE expression, fortunately, does not bring any new surprises. Seeing is believing, so, let’s put a quick test together:

We already have a test table in place with some non-numeric values stored in a char column, so that we could try to select out all the rows with numeric values, but just those with a value less than 1000. The code is straightforward, but, unfortunately, not guaranteed to work.

SELECT b

FROM (

SELECT b

FROM #Test

WHERE ISNUMERIC(b)= 1

) AS data

WHERE CAST(b ASINT) <= 1000;

When run against a SQL Server 2000, no error is thrown, but SQL Server 2005 and 2008 implement an optimization to push non-SARGable predicates into the index scan from the subquery which causes the statement to fail.

To avoid this issue, the query can be rewritten incorporating a CASE expression, maybe a bit obscure, but guaranteed not to fail:

SELECT b

FROM (

SELECT b

FROM #Test

) AS data

WHERE 1 =

CASE ISNUMERIC(b)

WHEN 1 THEN

CASE

WHEN CAST(b AS INT)<= 1000 THEN 1

ELSE 0

END

WHEN 0 THEN 0

END

The short-circuiting behaviour is clearly guaranteed and documented by Microsoft, as reported on Books Online: http://msdn.microsoft.com/en-us/library/ms181765.aspx

- Evaluates input_expression, and then in the order specified, evaluates input_expression = when_expression for each WHEN clause.

- Returns the result_expression of the first input_expression = when_expression that evaluates to TRUE.

IN

What do IN predicates have to do with short-circuiting? When used to test a list of values, they can be rewritten as a series of OR expressions.

Let’s build a simple query against our test table to investigate this behaviour:

SELECT * FROM #Test WHERE c IN (1,10,20,30)

If we take a look at the execution plan, we will see that the predicate has been changed as if the original query was written this way:

SELECT * FROM #Test WHERE c = 1 OR c = 10 OR c = 20 OR c = 30

Keeping in mind how short-circuiting works for the OR operator, we might expect queries to run faster if we could instruct the query optimizer to look first for values more likely to be found in the column, in order to keep the number of tests as low as possible. For instance, if the value stored in the column was the same for all the rows and it was the first to appear in the list of values to look for, only one test for row would have to be performed, with a significant time saving. In other words, looking back at the test table, does it make any difference issuing the above query reversing the order of the literals inside the IN value list? The answer is just one more test away:

SELECT * FROM #Test WHERE c IN (30,20,10,1)

As you can see from the execution plan, the order of values in the list does not change the scan predicate. In this case, the query processor decided to spool and sort the literals inside the IN predicate, before applying them. This means that, once again, we can say that some short-circuiting takes place, but we have no control over the evaluation order.

To ensure that values are tested in a predictable order, we could rewrite the query using a CASE expression, but do not expect performance miracles; it is not the number of comparisons that makes the difference, it’s the query plan, instead, that determines how fast a query will execute.

If you do not believe it is so, you could take a look at the FizzBuzz problem solved here. Cutting down by 15% the number of calculations performed for each row saves just one or two seconds over a 160 seconds total execution time, when working with 1 billion rows! It is generally nothing worth worrying about.

Short-circuit myths

There are plenty of short-circuit myths around in forums and blogs: I’ll try to enumerate and verify the most common ones:

- SQL Server does not short-circuit expressions

This article demonstrates that SQL Server does sometimes short-circuit expressions, but the behaviour is not documented (aside from CASE) so we cannot rely on it.

- Short-circuit applies only to OR expressions

It is false and very easy to verify. Any boolean expression is capable of being short-circuited, in the right circumstances.

- SQL Server does not short-circuit expressions that contain nondeterministic functions

Once again very easy to verify:

DECLARE @a INT = 1; IF @a = 1 OR CAST(NEWID()AS VARCHAR(50))= 0 PRINT 'Another myth';

NEWID is a nondeterministic function that returns a GUID, which cannot be converted to an integer. Because of short-circuiting, no error occurs.

I am citing this one because it comes from one of the first Google entries for “short-circuit Sql Server” (Mark Cohen’s blog).

Conclusion

We have seen that SQL Server is sometimes able to short-circuit expressions, but, aside from CASE expressions, we have no control over this feature. The purpose of short-circuiting in procedural languages is avoiding problematic expressions and reduce execution times. In T-SQL, none of these goals can be achieved by means of short-circuiting expressions.

Relying on this feature to prevent the evaluation of problematic expressions often means entrusting your code to an undocumented implementation detail that may change at any time, while trying to optimize a query by cutting down the number of expression evaluations has a negligible effect on performance compared to tuning the execution plan.