Introduction

Hi again! For this somewhat lengthy article, I would like to illustrate a two ways to execute data loading across multiple instances of SQL Server to load data to a central repository. One method will connect to each instance serially and all steps will be contained in a single SSIS package. The second method will leverage Powershell and its Job feature to kick off the execution of an SSIS package across multiple instances without having to wait for the package to complete on one instance before starting the next. Loading data from all of your instances into a single data source is one real world situation where you may benefit from using either of these methods. I personally like to pull database configuration, backup, and instance-level information from my instances on a regular basis.

Background

I started working for my current company when we had approximately 40 instances in inventory, and that has grown to well over 1000 (consolidation of business units, company growth, etc). As I automated processes, I used the first method to serially connect and collect data from our instances. As the inventory has grown, the linear approach to loading data from all of our instances (database info, backup status, etc) has turned a 10 minute data loading process into upwards of 3 hours.

Below, I will show one way to linearly collect data through a foreach loop in SSIS, and a method to take the “foreach” loop contents out of the package and execute the loop via Powershell Invoke-Command commands with the AsJob parameter. SSIS cannot run a foreach container in a parallel loop task, and while there are products on the market that can do this, we always like to have a free option to leverage.

For purposes of the article, the databases leveraged will be minimalistic and the SSIS packages will also be simple in nature (no error handling, for example).

Both methods will do the following basic ETL tasks:

- Truncate the destination table

- Load a list of databases from each instance in inventory to a central database_information table.

The Inventory

Both methods will pull from a list of instances in the DEMO_DBAInventory database and load the data into the DEMO_DBARepository database. The layout looks like this:

Databases

DEMO_DBAInventory

Dbo.instances – contains inventory of instances to loop through

Instance_name varchar(255)

DEMO_DBARepository

Dbo.database_information (truncated and refreshed by the SSIS packages)

Instance_name varchar(255)

Database_name varchar(255)

Inventory Assumptions

The account that you are going to configure within the SSIS package’s connection manager under will need to have permissions to each instance to be able to run the queries that you are issuing. Therefore, if only pulling data from the system DMV’s, maybe only VIEW SERVER STATE is required, or if creating a database, db_creator rights, etc. Basically, the mechanism will be through the SSIS package, but the permissions must be sufficient for the operation to succeed.

The Methods

For both methods shown below, the goal will be to pull in a list of databases from each instance that we have in inventory using an SSIS data flow task that executes “Select @@servername, [name] FROM sys.databases”.

First Method – ForEach Loop within SSIS Package

This method works by having a few variables defined and taking a query of a list of instances to process and processing them using the ForEach component on the control flow, and the ForEach changes the connection dynamically, and then executes the data flow task.

The control flow for this looks like this:

To run this as a SQL Agent job, the job stop would look like this:

I’ll break down the Control Flow, item-by-item, so that you can see how the package is built and how the framework can be leveraged.

Truncate DBARpository DB Tables

The Truncate is a simple Execute SQL task with no result set, running against the repository database. In this case, this is the only pre-load step to be completed before the data is refreshed.

Package Variables

There are two variables used. Instance_list is an object that will be populated with a “full result set” of instances that will be looped through. Instance_name will be set “on the fly” as the loop progresses.

Pull Instance List from Inventory

The “Pull Instance List from Inventory” execute SQL task will load the list into the instance_list object variable. 0 as the Result Name is something that just works to populate the object type.

Loop through each instance

The object instance_list is used in the foreach ADO enumerator, and the only column that exists in the result set is “instance_name”, and that is at index 0 of the object. As such, as the loop progresses, each record from index 0 will load into the instance_name variable.

Set instance_connection properties

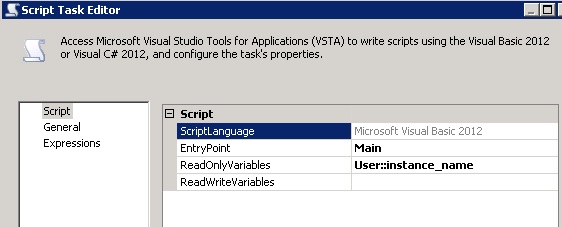

Here are the settings.

The code for the Main Sub of the script task looks like this:

'Pull in all connection managers

Dim conns As Connections = Dts.Connections

'Set connection manager ServerName property for the Instance_Connection connection manager

Dim cmInstance_Connection As ConnectionManager = conns("Instance_Connection")

cmInstance_Connection.Properties("ServerName").SetValue(cmInstance_Connection, Dts.Variables("User::instance_name").Value.ToString())We want to read the instance_name variable from this script task, so we set that accordingly, and then we set the connection property values from the VB.Net code.

Pull Database List

Here is the flow.

OLE DB Source: Instance_Connection database_information query

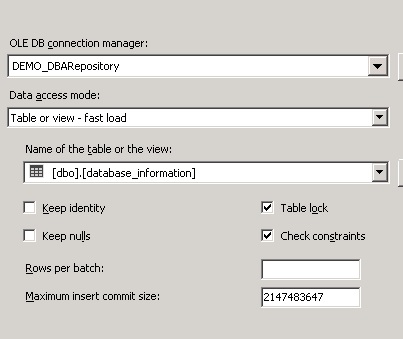

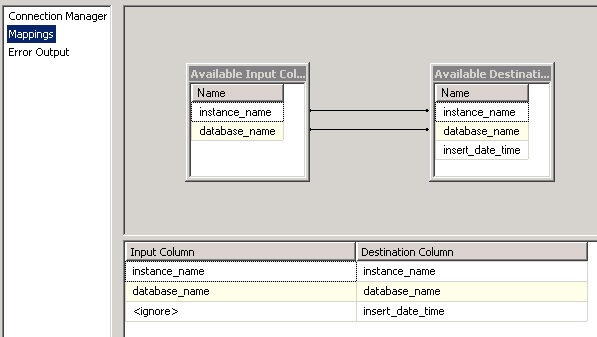

OLE DB Destination: DBARepository database_information

The destination is set to the repository database and the column mappings are set accordingly.

Pros to this method:

- Just one simplified job step on a SQL Agent Job to run the pre-load steps, the foreach contents, and post-load steps since they can all be contained within the package

- Likely more familiar than powershell on looping through connections

- Least amount of processing power needed since only one instance of the SSIS package will ever be getting executed at once.

- Error handling can be added to account for all tasks in the package

Cons to this method:

- Linear, data loading / foreach contents must complete on one instance before proceeding to the next.

- Somewhat advanced SSIS techniques using objects, full result sets, variable mapping for the foreach component, and dynamically setting connection manager properties

- Failure of one instance can halt the package (additional considerations for error handling and making sure package continues through the loop if one instance fails)

Second Method – SSIS package called by Powershell Jobs

This method works by taking the contents of the ForEach loop in the first method and placing them “on their own” in the package. The “pre-load” and “post-load” (steps before and after the foreach loop from method 1, steps would go in separate job steps, and instance name gets passed from a foreach loop in a powershell script. Powershell jobs are used to kick off multiple package calls without waiting for each instance’s execution to complete.

The control flow looks like this:

The SSIS package here is simply designed to run against one instance, whatever is loaded within the SSIS variable named “instance_name”. The script task and the data flow task are the same as they are in method 1, but obviously stripped from the foreach container.

The variables for the method 2 package look like this:

To run this package against a list of instances, we’ll turn to using Powershell. The SQL Agent job and job steps would look like this:

The Powershell (v2) script is framed like this:

- Query instance list

- For each instance in the list

- Create the command to execute the SSIS package and pass the instance name to the package

- Execute the command via Powershell job.

The Powershell script is shown below:

#S.Kusen Feb 6 2015

#

#This powershell script shows a way to loop through a list of instances pulled from a database table and execute an SSIS package against those instances.

#The example uses a file path to a .dtsx file. Search dtexec in BOL for example on how to execute from packages stored within MSDB.

#

#For this example to work, you'll need to do the following updates to the script:

#1. set the $dbaadminserver value to the name of your SQL Server where the DEMO_DBAInventory exists.

#2. Verify the path to dtexec. The $scriptcommand is set to C:\Program Files\Microsoft SQL Server\110\DTS\Binn\dtexec by default. Your path may be different.

#

Add-PSSnapin SqlServerProviderSnapin110 -ErrorAction SilentlyContinue -WarningAction SilentlyContinue #SQL 2008 / 2008 R2

Add-PSSnapin SqlServerCmdletSnapin110 -ErrorAction SilentlyContinue -WarningAction SilentlyContinue #SQL 2008 / 2008 R2

##### Set the $dbaadminserver to where you have placed the DEMO_DBAInventory database

$dbaadminserver = "yourSQLserver"

$dbainventorydb = "DEMO_DBAInventory"

$sqlcommand_pull_instance_list = "SELECT [instance_name] FROM [dbo].[instances]"

$instances = Invoke-Sqlcmd -DisableVariables -QueryTimeout 900 -ServerInstance $dbaadminserver -Database $dbainventorydb -Query $sqlcommand_pull_instance_list

#uncomment the next like to see the list of instances that was pulled back by the query

#$instances

#loop through each instance and execute the SSIS package

foreach ($instance in $instances) {

#the path to the dtexec command may be different on your server. Powershell is not very friendly to folder names with spaces, so the insertion

#of char(34) puts in quotes to help resolve the path

#this line will create the command to pass the instance name from the instance loaded in the loop to the package variable instance_name

$scriptcommand = " & C:\$([Char]34)Program Files$([Char]34)\$([Char]34)Microsoft SQL Server$([Char]34)\110\DTS\Binn\dtexec /FILE $([Char]34)C:\temp\Method2.dtsx$([Char]34) /SET $([Char]34)\Package.Variables[instance_name].Value;$($instance.instance_name)$([Char]34)"

try

{

#initiate the powershell job for the loaded instance, should continue through to start jobs for all instances

Start-Job -ScriptBlock { param($p_command); Invoke-Expression $p_command } -ArgumentList $scriptcommand

}

catch { [system.exception] "Failed to Pull from $instance" | Out-File "C:\temp\method2_catch_errors.txt" -Width 200 -Append}

finally {write-host "Finally $instance"}

#$instance.instancename

} #foreach ($instance in $instances)

#this will give each job 3 minutes to execute. If it runs beyond 3 minutes, it is presumed hung and will allow powershell to quit the job and

#prevent memory "leaks".

#Also, when you schedule this as a SQL Agent job, you may want to know that the data collection is complete before proceeding to the next step in your

#job.

get-job | wait-job -Timeout 180;

Pros to this method:

- The primary “pro” to this method is the ability to kick off multiple streams of the “foreach” content via Powershell’s Start-Job function. To illustrate this:

- Powershell jobs, like SQL Agent jobs, can run in parallel. If you had an SSIS package that took 2 minutes to run, and 1000 instances to run that against, that would be 2000 minutes, ~33 hours Each powershell job takes about 5 seconds to get started.

- At 5 seconds to start each job, it might still take a little more than 1 hour and 20 minutes to get all of the jobs started, it is reasonable to think that they should all complete within 10 minutes of the last job starting. From 33 hours down to 1.5 hours is an enormous time improvement.

- Can easily swap the instance query from the SQL Agent job step properties or ps1 script in a text editor without having to open Visual Studio to edit the package (or edit XML)

- Package design really only contains what you wish to execute on every instance.

- The benefit to this is that you can add pre and post tasks to the agent job without having to manipulate the SSIS package

Cons to this method:

- Knowledge of powershell scripting and getting familiar with how powershell parses variables and values from arrays.

- Package design really only contains what you wish to execute on every instance.

- The con of this is that it might be cleaner to have all pre and post tasks contained within one package.

- You will have a large number of powershell/dtexec commands taxing your system as the jobs run in parallel within the powershell script.

Using the demo scripts and packages

*The SSIS packages were built in Visual Studio 2012 using SQL Server Data Tools for Business Intelligence for VS 2012. This was used and tested on a SQL Sever 2012 environment running Powershell v2.

- Create the demo databases using script 01_create_dbs_and_tables.sql

- Open and edit script 02_populate_instance_inventory.sql to load a list of instances in your environment.

- Copy the SSIS packages to C:\temp\ directory.

- Method1.dtsx

- Method2.dtsx

- Method2.ps1

- Edit the Method1.dtsx Connection Managers to the SQL Server where you executed 01_create_dbs_and_tables.sql.

- DEMO_DBAInventory

- DEMO_DBARepository

- Instance_Connection

- Edit the method2.ps1 file

- Set the $dbaadminserver value to the name of your SQL Server where the DEMO_DBAInventory exists.

- Verify the path to dtexec. The $scriptcommand is set to C:\Program Files\Microsoft SQL Server\110\DTS\Binn\dtexec in my script. Your path may be different.

- Deploy the SQL Agent Jobs using the script 03_deploy_sql_agent_jobs.sql

Taking it to the next level

- Obviously, error handling could be added to both the powershell script as well as the SSIS packages. For simplicity, I left that out of the demo.

- Powershell v3 offers the ability to do a foreach loop in parallel which could potentially be used to speed this up even further.

- You could also split your instance list up logically and execute multiple powershell scripts for each data set (ie prod and non-prod powershell files).

- Load in DMV, config, backup, and whatever else you can think of to a central repository for reporting.

Closing Remarks

There are many other methods out there to gather data from multiple instances, but the two methods that I showed today hopefully give you some insight into how powerful SSIS and powershell can be when combined. Method 1 showed a single package to linearly collect data and store the entire ETL process in a single control flow. Method 2 took the “foreach” contents from method 1 and moved the looping through instances into a powershell foreach loop containing Start-Job commands that was intended to help speed up processing across many instances. I hope you’re able to leverage some of these tasks and components in your environments.